What is a Neuron?

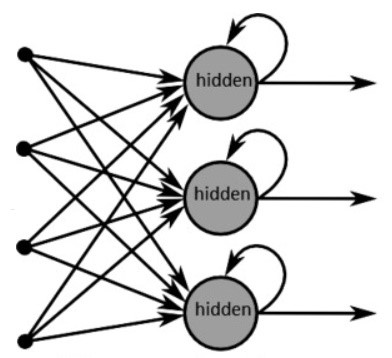

In biological terms, a neuron is the basic unit of the nervous system. It carries messages in the form of electrical impulses, and networks of neurons in the brain underlie human intelligence. Today, the same idea is also used in artificial intelligence, where an artificial neuron computes a weighted sum of its inputs and passes the result through an activation function.

A Recurrent Neural Network (RNN) is a class of artificial neural network in which the connections between neurons form a directed cycle. In simpler words, the hidden layer has a self-loop. This lets an RNN use the previous state of its hidden neurons when computing the current state, so along with the current input, it uses information it has learned from earlier inputs. This internal memory is what sets RNNs apart from feedforward networks, which process each input independently. A standard RNN's memory is short-term, however: it remembers recent inputs well but struggles to hold on to information from far back in a sequence.
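To make the artificial neuron concrete, here is a minimal sketch of one common formulation: a weighted sum of the inputs plus a bias, passed through a sigmoid activation. The function name `neuron` and the example weights are hypothetical, chosen only for illustration.

```python
import math

def neuron(inputs, weights, bias):
    """A single artificial neuron: weighted sum of the inputs plus a bias,
    passed through a sigmoid activation that squashes the result into (0, 1)."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical inputs, weights, and bias, for illustration only.
print(neuron([1.0, 0.5], [0.4, -0.2], 0.1))
```

In a trained network the weights and bias are learned from data rather than set by hand.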

How Does a Recurrent Neural Network Work?

RNNs are a preferred choice for sequential data such as time series, speech, text, financial data, audio, video, and weather, because they can capture the trends of a sequence and its context better than many other algorithms. But what is sequential data? Basically, it is ordered data in which related items follow each other; examples are financial data or a DNA sequence. Perhaps the most popular type of sequential data is time series data, which is simply a series of data points listed in time order.
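As a rough illustration of why order matters, one common way to prepare a time series for an RNN is to slide a window over it and pair each run of past points with the value that comes next. The sketch below assumes a plain Python list of readings; `make_windows` and the numbers are hypothetical.

```python
def make_windows(series, window):
    """Turn a time-ordered series into (past window, next value) pairs.

    Each input is `window` consecutive points and the target is the point
    that immediately follows them, so the original order is preserved."""
    pairs = []
    for start in range(len(series) - window):
        past = series[start:start + window]
        nxt = series[start + window]
        pairs.append((past, nxt))
    return pairs

# Hypothetical daily readings, listed in time order.
readings = [10, 12, 13, 15, 18]
for past, nxt in make_windows(readings, 3):
    print(past, "->", nxt)
# [10, 12, 13] -> 15
# [12, 13, 15] -> 18
```

Shuffling `readings` would produce pairs that no longer describe what actually came next, which is why sequential data cannot be treated as an unordered set.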

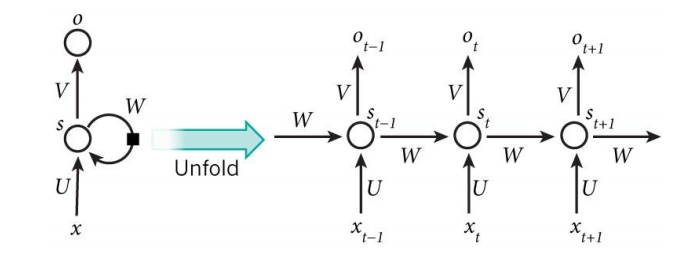

In an RNN, the information cycles through a loop. When it makes a decision, it takes into consideration the current input and also what it has learned from the inputs it received previously. Recurrent Neural Networks add the immediate past to the present.

As you can see in the figure, the previous state s(t-1) acts as an input to the present state s(t). Therefore, the state s(t) has two inputs: the current input x(t) and the previous state s(t-1). In turn, s(t) is propagated to s(t+1). So a Recurrent Neural Network has two inputs, the present and the recent past. This matters because a sequence of data contains crucial information about what is coming next, and that is why an RNN can memorize things and use them later.
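The loop described above can be sketched as a one-unit RNN. This assumes the common tanh update s(t) = tanh(w_x * x(t) + w_s * s(t-1) + b); the function `rnn_forward` and the weight values are hypothetical, not taken from the figure.

```python
import math

def rnn_forward(xs, w_x, w_s, b, s0=0.0):
    """Unroll a one-unit RNN over the sequence xs.

    Each new state s(t) combines the current input x(t) with the previous
    state s(t-1): s(t) = tanh(w_x * x(t) + w_s * s(t-1) + b)."""
    s = s0
    states = []
    for x in xs:
        s = math.tanh(w_x * x + w_s * s + b)  # present input + recent past
        states.append(s)
    return states

# Hypothetical weights; in a real network they are learned during training.
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_s=0.5, b=0.0)
print(states)
```

Although the second and third inputs are zero, the states stay non-zero because each step carries forward part of the previous state. That carried-over value also shrinks at every step, which hints at why a plain RNN's memory is short.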

Recurrent Neural Network with Long Short-Term Memory

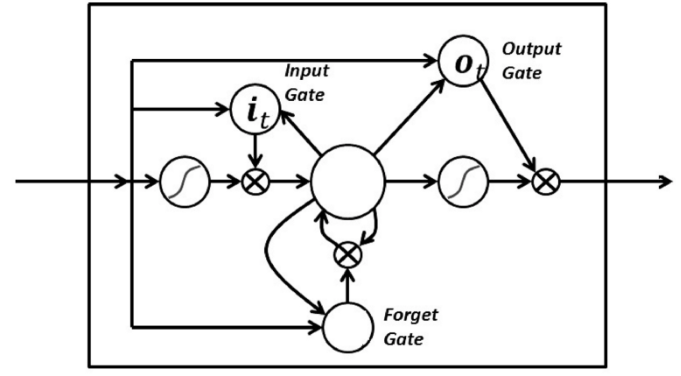

Plain RNNs have several issues. Their memory is very short, and the major problem is the vanishing gradient. A gradient is a set of partial derivatives: a measure of how much the output of a function changes if you change its inputs a little bit. During training, the gradient of the error is propagated backward through every time step and gets multiplied by a factor at each step; when those factors are smaller than 1, the gradient shrinks exponentially and becomes too small, so the model stops learning or takes far too long to train. This problem is addressed by Long Short-Term Memory (LSTM), introduced by Sepp Hochreiter and Juergen Schmidhuber, which essentially extends the memory of an RNN. An LSTM has 3 gates:

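- Input Gate: decides how much of the new input to let into the cell state.

- Forget Gate: decides which information in the cell state is no longer important and should be discarded, and which should be kept for the current time step.

- Output Gate: decides which part of the cell state is passed on as the output (the hidden state) at the current time step.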

Together, these 3 gates decide which information is important enough to keep and which can be eliminated. This lets an LSTM retain information over much longer sequences than a plain RNN.
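The three gates can be sketched as a single step of a one-unit LSTM. This follows the widely used formulation in which sigmoid gates produce values between 0 and 1 that scale information, and a tanh layer proposes candidate values for the cell state. The scalar weights, the `params` layout, and the name `lstm_step` are assumptions made for readability; real LSTMs use weight matrices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM.

    p maps each component name to hypothetical scalar weights (w_x, w_h, b)."""
    def layer(name, act):
        w_x, w_h, b = p[name]
        return act(w_x * x + w_h * h_prev + b)

    i = layer("input", sigmoid)        # input gate: how much new information to let in
    f = layer("forget", sigmoid)       # forget gate: how much of the old cell state to keep
    o = layer("output", sigmoid)       # output gate: how much of the cell state to expose
    g = layer("candidate", math.tanh)  # candidate values that could be written to the cell
    c = f * c_prev + i * g             # keep part of the old memory, add part of the new
    h = o * math.tanh(c)               # hidden state, i.e. the output at this time step
    return h, c

# Hypothetical parameters; a high forget-gate bias keeps most of the cell state.
params = {
    "input": (1.0, 0.0, 0.0),
    "forget": (0.0, 0.0, 2.0),
    "output": (0.0, 0.0, 1.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    print(round(h, 3), round(c, 3))
```

Because the forget gate stays near 1 here, the cell state still reflects the first input two steps later, even though the later inputs are zero.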

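LSTM mitigates the vanishing gradient problem because the cell state is updated additively: the old cell state is scaled by the forget gate and new information is added to it, rather than being squashed through an activation at every step. As long as the forget gate stays close to 1, the gradient flowing along the cell state does not vanish, so training tends to be faster and to reach higher accuracy on long sequences than with a plain RNN.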
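A simplified numerical sketch can show the difference. For a one-unit tanh RNN, the chain rule makes the gradient of the last state with respect to the first a product of one factor w_s * (1 - s(t)^2) per step. Along the LSTM's cell-state path, treating the gates as constants, each step contributes just the forget gate value f(t). The weights, the input, and the forget value 0.98 are hypothetical, and the LSTM figure ignores the indirect paths through the hidden state.

```python
import math

def rnn_state_gradient(xs, w_x, w_s, b=0.0):
    """d s(T) / d s(0) for the one-unit RNN s(t) = tanh(w_x*x(t) + w_s*s(t-1) + b).

    Each step multiplies the gradient by d s(t) / d s(t-1) = w_s * (1 - s(t)**2)."""
    s, grad = 0.0, 1.0
    for x in xs:
        s = math.tanh(w_x * x + w_s * s + b)
        grad *= w_s * (1.0 - s * s)
    return grad

def lstm_cell_path_gradient(forget_values):
    """Gradient along the cell-state path only: c(t) = f(t)*c(t-1) + i(t)*g(t),
    so d c(t) / d c(t-1) = f(t) when the gates are treated as constants."""
    grad = 1.0
    for f in forget_values:
        grad *= f
    return grad

steps = 50
xs = [0.5] * steps  # hypothetical constant input
rnn_grad = rnn_state_gradient(xs, w_x=1.0, w_s=0.9)
lstm_grad = lstm_cell_path_gradient([0.98] * steps)
print("RNN :", rnn_grad)
print("LSTM:", lstm_grad)
```

With these values the RNN gradient shrinks to a vanishingly small number after 50 steps, while the cell-state gradient remains a sizable fraction of 1. The effect depends on the forget gate: if it were close to 0, the cell-state gradient would vanish as well.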

Contact Mirketa to leverage our Elixir platform for solving your problems using the right artificial intelligence approach.
